
May 2023

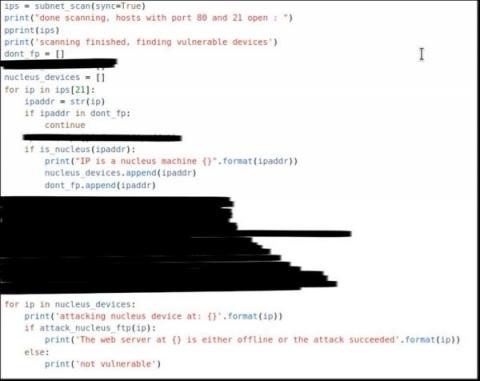

AI-Assisted Attacks Are Coming to OT and Unmanaged Devices - the Time to Prepare Is Now

Malicious code is not difficult to find these days, even for OT, IoT and other embedded and unmanaged devices. Public exploit proofs-of-concept (PoCs) for IP camera vulnerabilities are routinely used by Chinese APTs, popular building automation devices are targeted by hacktivists, and unpatched routers are used for Russian espionage.

Introducing Charlotte AI, CrowdStrike's Generative AI Security Analyst: Ushering in the Future of AI-Powered Cybersecurity

CrowdStrike has pioneered the use of artificial intelligence (AI) since we first introduced AI-powered protection to replace signature-based antivirus over 10 years ago, and we’ve continued to deeply integrate it across our platform since. We combine the best in technology with the best of human expertise to protect customers and stop breaches.

Top Tips to Secure Your Organization from Cybercrime in Today's World | ChatGPT

Battle of the AI: Defensive Cybersecurity vs. Malicious Cyber Criminals

[Mastering Minds] China's Cognitive Warfare Ambitions Are Social Engineering At Scale

As the world continues to evolve, so does the nature of warfare. China's People's Liberation Army (PLA) is increasingly focused on "Cognitive Warfare," a term referring to artificial intelligence (AI)-enabled military systems and operational concepts. The PLA's exploration into this new domain of warfare could potentially change the dynamics of global conflict.

The Role of AI in Cybersecurity: Will it Replace Human Professionals?

Colliding with the Future: The Disruptive Force of Generative AI in B2B Software

Over the past few months, our collective fascination with AI has reached unprecedented heights, leading to an influx of information and discussions on its potential implications. It seems that wherever we turn, AI dominates the conversation. AI has captivated the imaginations of tech enthusiasts, researchers, and everyday individuals alike. At the tender age of 11, I received my very first computer, the legendary ZX Spectrum. Looking back, it's hard to believe how much has changed since then.

An Explainer for how AI and Low-Code/No-Code are Friends, not Foes

In today’s rapidly evolving digital landscape, organizations not only want but need to harness the power of emerging technologies to stay ahead of the competition. Two of the most promising trends in the tech world are generative AI and low-code/no-code development. Generative AI, in particular, has captured the majority of the headlines, with seemingly infinite use cases to spur productivity for end users and businesses.

Teleport Assist - GPT-4 powered DevOps assistant.

ChatGPT Reveals Top 5 Cybersecurity Concerns for Businesses

Greatest Threats to Businesses Today: Insights by ChatGPT

ChatGPT and Cato: Get Fish, Not Tackles

ChatGPT is all the rage these days. Its ability to magically produce coherent and typically well-written, essay-length answers to (almost) any question is simply mind-blowing. Like any marketing department on the planet, we wanted to “latch onto the news.” How can we connect Cato and ChatGPT? Our head of demand generation, Merav Keren, made an interesting comparison between ChatGPT and Google Search.

AI on offense: Can ChatGPT be used for cyberattacks?

Coffee Talk with SURGe: Volt Typhoon, CosmicEnergy, Pentagon Cyber Strategy, AI Risk

Unlocking the Secrets of Spanish Slang: A Closer Look at Cybersecurity Lingo

Build a Culture and Governance to Securely Enable ChatGPT and Generative AI Applications - Or Get Left Behind

No sooner did ChatGPT and the topic of generative artificial intelligence (AI) go mainstream than every enterprise business technology leader started asking the same question. Is it safe?

The intersection of telehealth, AI, and Cybersecurity

Artificial intelligence is the hottest topic in tech today. AI algorithms are capable of breaking down massive amounts of data in the blink of an eye and have the potential to help us all lead healthier, happier lives. The power of machine learning means that AI-integrated telehealth services are on the rise, too. Almost every progressive provider today uses some amount of AI to track patients’ health data, schedule appointments, or automatically order medicine.

AI-generated Disinformation Dipped The Markets Yesterday

The Insider reported that an apparently AI-generated photo faking an explosion near the Pentagon in D.C. went viral. The Arlington Police Department confirmed that the image and accompanying reports were fake. But when the news was shared by a reputable Twitter account on Monday, the market briefly dipped. The photo was spread by dozens of accounts on social media, including RT, a Russian state-media Twitter account with more than 3 million followers — but the post has since been deleted.

CrowdStrike Advances the Use of AI to Predict Adversary Behavior and Significantly Improve Protection

Since CrowdStrike’s founding in 2011, we have pioneered the use of artificial intelligence (AI) and machine learning (ML) in cybersecurity to solve our customers’ most pressing challenges. Our application of AI has fit into three practical categories.

Sharing your business's data with ChatGPT: How risky is it?

As a natural language processing model, ChatGPT - and other similar machine learning-based language models - is trained on huge amounts of textual data. Processing all this data, ChatGPT can produce written responses that sound like they come from a real human being. ChatGPT learns from the data it ingests. If this information includes your sensitive business data, then sharing it with ChatGPT could potentially be risky and lead to cybersecurity concerns.

CISO Matters: Rise of the Machines - A CISO's Perspective on Generative AI

How ChatGPT is Changing Our World

The artificial intelligence (AI)-based language model, ChatGPT, has gained a lot of attention recently, and rightfully so. It is arguably the most popular technical innovation since the introduction of the now-ubiquitous smart speakers in our homes that enable us to call out a question and receive an instant answer. But what is it, and why is it relevant to cyber security and data protection?

Learn about Corelight and Zeek with AI

UTMStack Unveils Ground-breaking Artificial Intelligence to Revolutionize Cybersecurity Operations

Doral, Florida - UTMStack, a leading innovator in cybersecurity solutions, has announced a significant breakthrough in the field of cybersecurity – an Artificial Intelligence (AI) system that performs the job of a security analyst, promising to transform cybersecurity practices forever.

Will predictive AI revolutionize the SIEM industry?

The cybersecurity industry is extremely dynamic and always finds a way to incorporate the latest and best available technologies into its systems. There are two major reasons: one, because cyberattacks are constantly evolving and organizations need cutting-edge technologies in place to detect sophisticated attacks; and two, because of the complexity of the network architecture of many organizations.

Six Key Security Risks of Generative AI

Generative Artificial Intelligence (AI) has revolutionized various fields, from creative arts to content generation. However, as this technology becomes more prevalent, it raises important considerations regarding data privacy and confidentiality. In this blog post, we will delve into the implications of Generative AI on data privacy and explore the role of Data Leak Prevention (DLP) solutions in mitigating potential risks.

Hype vs. Reality: Are Generative AI and Large Language Models the Next Cyberthreat?

How to secure Generative AI applications

I remember when the first iPhone was announced in 2007. This was NOT an iPhone as we think of one today. It had warts. A lot of warts. It couldn’t do MMS for example. But I remember the possibility it brought to mind. No product before had seemed like anything more than a product. The iPhone, or more the potential that the iPhone hinted at, had an actual impact on me. It changed my thinking about what could be.

Netskope Demo: Keep Source Code out of AI Tools like ChatGPT

Watershed Moment for Responsible AI or Just Another Conversation Starter?

The Biden Administration’s recent moves to promote “responsible innovation” in artificial intelligence may not fully satiate the appetites of AI enthusiasts or defuse the fears of AI skeptics. But the moves do appear to at least start to form a long-awaited framework for the ongoing development of one of the more controversial technologies impacting people’s daily lives. The May 4 announcement included three pieces of news.

Cloudflare Equips Organisations with the Zero Trust Security They Need to Safely Use Generative AI

AI can crack your passwords (and other very old news)

Artificial intelligence (AI) made a larger-than-usual splash recently when word broke of an AI-powered password cracker. I have a bit of AI fatigue, but these stories immediately grabbed my attention — they had me at “passwords.”

CTI Roundup: Hackers Use ChatGPT Lures to Spread Malware on Facebook

CISA issues a joint advisory on Russia’s Snake malware operation, hackers use ChatGPT lures to spread malware on Facebook, and a new phishing-as-a-service tool appears in the wild.

Who is Securing the Apps Built by Generative AI?

The rise of low-code/no-code platforms has empowered business professionals to independently address their needs without relying on IT. Now, the integration of generative AI into these platforms further enhances their capabilities and eliminates entry barriers. However, as everyone becomes a developer, concerns about security risks arise.

60 Second Charity Challenge: Regulating Artificial Intelligence

The Face Off: AI Deepfakes and the Threat to the 2024 Election

The Associated Press warned this week that AI experts have raised concerns about the potential impact of deepfake technology on the upcoming 2024 election. Deepfakes are highly convincing digital disinformation, easily taken for the real thing and forwarded to friends and family as misinformation. Researchers fear that these advanced AI-generated videos could be used to spread false information, sway public opinion, and disrupt democratic processes.

The 443 Episode 242 - An Interview with ChatGPT

A complete suite of Zero Trust security tools to help get the most from AI

Cloudflare One gives teams of any size the ability to safely use the best tools on the Internet without management headaches or performance challenges. We’re excited to announce Cloudflare One for AI, a new collection of features that help your team build with the latest AI services while still maintaining a Zero Trust security posture.

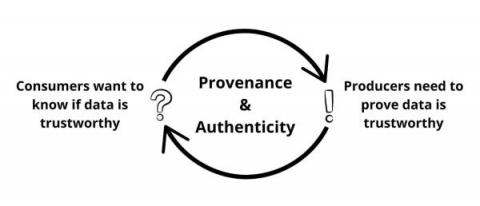

In the age of AI, how do you know what data to trust?

Last week, the godfather of AI, Geoffrey Hinton, smashed the glass and activated the big red AI alarm button, warning all of us about creating a world where we won’t “be able to know what is true anymore.” What’s happening now with everything AI makes all the other tech revolutions of the past 40-plus years seem almost trivial.

Netskope Demo - Safely Enable ChatGPT

Modern Data Protection Safeguards for ChatGPT and Other Generative AI Applications

Co-authored by Carmine Clementelli and Jason Clark

In recent times, the rise of artificial intelligence (AI) has revolutionized the way more and more corporate users interact with their daily work. Generative AI-based SaaS applications like ChatGPT have offered countless opportunities to organizations and their employees to improve business productivity, ease numerous tasks, enhance services, and assist in streamlining operations.

Coffee Talk with SURGe!

Artificial intelligence might be insulting your intelligence

It’s Saturday morning. You’ve decided to sleep in after last night’s bender, and you can’t be bothered about the sound of your phone ringing. You decide to brush it off and go back to sleep, but the phone won’t stop ringing. You wake up and scan your surroundings. Your wife’s missing. You let the phone ring until it’s silent and bury your head in your pillow to block out the splitting headache that’s slowly building up. A single message tone goes off.

Introducing Nightfall AI for Zendesk | AI-Powered Cloud Data Leak Prevention (DLP)

AI-generated security fixes in Snyk Code now available

Finding and fixing security issues in your code has its challenges. Chief among them is the important step of actually changing your code to fix the problem. Getting there is a process: sorting through security tickets, deciphering what those security findings mean and where they come from in the source code, and then determining how to fix the problem so you can get back to development. Not to worry — AI will take care of everything, right?

Protecto Finds Overexposed Sensitive Data in Databricks

Key takeaways from RSA 2023: #BetterTogether and AI in security

Whether or not you made it to RSA 2023, here are two key themes we saw throughout this year’s conference.

ChatGPT Data Breach Break Down

Consolidation, Flexibility, ChatGPT, & Other Key Takeaways from Netskopers at RSA Conference 2023

At RSA Conference 2023, a number of Netskopers from across the organization who attended the event in San Francisco shared commentary on the trends, topics, and takeaways from this year’s conference.

Does Chat GPT Know Your Secrets?

Answering Key Questions About Embracing AI in Cybersecurity

As we witness a growing number of cyber-attacks and data breaches, the demand for advanced cybersecurity solutions is becoming critical. Artificial intelligence (AI) has emerged as a powerful contender to help solve pressing cybersecurity problems. Let’s explore the benefits, challenges, and potential risks of AI in cybersecurity using a Q&A composed of questions I hear often.

Can AI write secure code?

AI is advancing at a stunning rate, with new tools and use cases being discovered and announced every week, from writing poems all the way through to securing networks. Researchers aren’t completely sure what new AI models such as GPT-4 are capable of, which has led some big names such as Elon Musk and Steve Wozniak, alongside AI researchers, to call for a six-month halt on training more powerful models so focus can shift to developing safety protocols and regulations.

Trustwave Answers 11 Important Questions on ChatGPT

ChatGPT can arguably be called the breakout software introduction of the last 12 months, generating both amazement at its potential and concerns that threat actors will weaponize and use it as an attack platform. Karl Sigler, Senior Security Research Manager, SpiderLabs Threat Intelligence, along with Trustwave SpiderLabs researchers, has been tracking ChatGPT’s development over the last several months.

AI, Cybersecurity, and Emerging Regulations

The SecurityScorecard team has just returned from an exciting week in San Francisco at RSA Conference 2023. This year’s theme, “Stronger Together,” was meant to encourage collaboration and remind attendees that when it comes to cybersecurity, no one goes it alone. Building on each other’s diverse knowledge and skills is what creates breakthroughs.

The role of AI in healthcare: Revolutionizing the healthcare industry

The content of this post is solely the responsibility of the author. AT&T does not adopt or endorse any of the views, positions, or information provided by the author in this article.