
January 2024

Why Identity is the Cornerstone of a Zero Trust Architecture

Introducing NIST AI RMF: Monitor and mitigate AI risk

Demo Tuesday: AI Assist

Celebrating new milestones plus enterprise-ready features and more AI capabilities

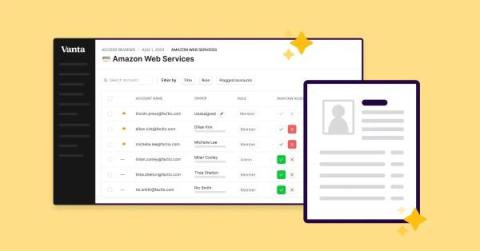

Introducing AI Data Import for Access Reviews

Data-Driven Decisions: How Energy Software Solutions Drive Efficiency

How to steal intellectual property from GPTs

Forget Deepfake Audio and Video. Now There's AI-Based Handwriting!

Five worthy reads: Making AI functionality transparent using the AI TRiSM framework

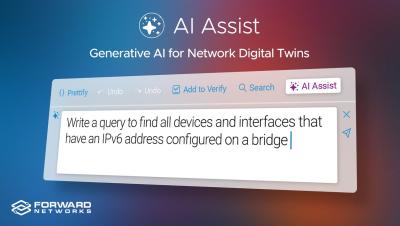

AI Assist | Feature Announcement

Retail in the Era of AI: An Industry Take on Splunk's 2024 Predictions

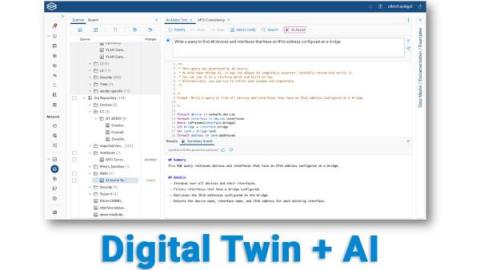

Forward Networks Unveils Generative AI Features and Strategic Roadmap for Digital Twin Platform

How Elastic AI Assistant for Security and Amazon Bedrock can empower security analysts for enhanced performance

NCSC Warns That AI is Already Being Used by Ransomware Gangs

Feature Announcement: AI Assist

Forward Networks Delivers First Generative AI Powered Feature

Future of VPNs in Network Security for Workers

Four Takeaways from the McKinsey AI Report

Use of Generative AI Apps Jumps 400% in 2023, Signaling the Potential for More AI-Themed Attacks

How Cloudflare's AI WAF proactively detected the Ivanti Connect Secure critical zero-day vulnerability

Cato Taps Generative AI to Improve Threat Communication

Making Sense of AI in Cybersecurity

Navigating the New Waters of AI-Powered Phishing Attacks

Fake Biden Robocall Demonstrates the Need for Artificial Intelligence Governance Regulation

AI Does Not Scare Me, But It Will Make The Problem Of Social Engineering Much Worse

3 tips from Snyk and Dynatrace's AI security experts

In AI we trust: AI governance best practices from legal and compliance leaders

What Existing Security Threats Do AI and LLMs Amplify? What Can We Do About Them?

Protecto - Data Protection for Gen AI Applications. Embrace AI confidently!

AI & Cybersecurity: Navigating the Digital Future

As we keep a close eye on trends impacting businesses this year, it is impossible to ignore the impacts of Artificial Intelligence and its evolving relationship with technology. One of the key areas experiencing this transformational change is cybersecurity. The integration of AI with cybersecurity practices is imperative, and it also demands a shift in how businesses approach their defenses.

Developing Enterprise-Ready Secure AI Agents with Protecto

Ultimate Guide to AI Cybersecurity: Benefits, Risks and Rewards

What do you get when you combine artificial intelligence (AI) and cybersecurity? If you answered with faster threat detection, quicker response times and improved security measures... you're only partially correct. Here's why.

AI and digital twins: A roadmap for future-proofing cybersecurity

Keeping up with threats is an ongoing problem in the constantly changing field of cybersecurity. The integration of artificial intelligence (AI) into cybersecurity is emerging as a vital roadmap for future-proofing cybersecurity, especially as organizations depend more and more on digital twins to mimic and optimize their physical counterparts.

2024 IT Predictions: What to Make of AI, Cloud, and Cyber Resiliency

The future is notoriously hard to see coming. In the 1997 sci-fi classic Men in Black (bet you didn’t see that reference coming), a movie about extraterrestrials living amongst us and the secret organization that monitors them, the character Kay, played by the great Tommy Lee Jones, sums up this reality perfectly: While visitors from distant galaxies have yet to make first contact (or have they?), his point stands.

Ethical Crossroads in AI: Unveiling Global Perspectives | Navigating the Dark Side of Technology

Implementing AI: Balancing Business Objectives and Security Requirements

Artificial Intelligence (AI) and machine learning have become integral tools for organizations across various industries. However, the successful adoption of these technologies requires a careful balance between business objectives and security requirements.

The Road Ahead: What Awaits in the Era of AI-Powered Cyberthreats?

Analysis of Phishing Emails Shows High Likelihood They Were Written By AI

It’s no longer theoretical: testing with anti-AI content solutions indicates that phishing attacks and email scams are leveraging AI-generated content. I’ve been telling you since ChatGPT became publicly available that we’d see AI misused to craft compelling, business-level email content.

Navigating the AI Landscape: The Urgent Call for Transparent Martial Law | Razorthorn Security

The Hidden Costs of AI: Disruptions, Frauds, Job Loss, and the Looming Wage Depression

AI and security: It is complicated but doesn't need to be

Three Tips To Use AI Securely at Work

How can developers use AI securely in their tooling and processes, software, and in general? Is AI a friend or foe? Read on to find out.

AI and privacy - Addressing the issues and challenges

Artificial intelligence (AI) has seamlessly woven itself into the fabric of our digital landscape, revolutionizing industries from healthcare to finance. As AI applications proliferate, the shadow of privacy concerns looms large. The convergence of AI and privacy gives rise to a complex interplay where innovative technologies and individual privacy rights collide.

Navigating the Reality of AI: Debunking the AGI Myth and Addressing Current Challenges #podcast

How AI is transforming the future of trust

How is generative AI transforming trust? And what does it mean for companies — from startups to enterprises — to be trustworthy in an increasingly AI-driven world?

GPT Guard - A Step by Step Guide

7 Cybersecurity Predictions for 2024: An AI-Dominated Year

How Generative AI Will Accelerate Cybersecurity with Sherrod DeGrippo

Beyond Buzzwords: The Truth About AI

OpenAI's GPT Store: What to Know

Introducing Cloudflare's 2024 API security and management report

You may know Cloudflare as the company powering nearly 20% of the web. But powering and protecting websites and static content is only a fraction of what we do. In fact, well over half of the dynamic traffic on our network consists not of web pages, but of Application Programming Interface (API) traffic — the plumbing that makes technology work.

How to choose a security tool for your AI-generated code

“Not another AI tool!” Yes, we hear you. Nevertheless, AI is here to stay, and generative AI coding tools in particular are causing a headache for security leaders. We recently discussed why in our post “Why you need a security companion for AI-generated code.” Purchasing a new security tool to secure generative AI code is a weighty consideration. It needs to serve the needs of both your security team and your developers, and it needs a roadmap to avoid obsolescence.

Secure AI System Development

Using Amazon SageMaker to Predict Risk Scores from Splunk

From automated compliance to AI: How investors are prioritizing security

AI and cybersecurity are top strategic priorities for companies at every scale — from the teams using the tools to increase efficiency all the way up to board leaders who are investing in AI capabilities.

Unleashing Creativity: Exploring CapCut's Online Photo Editor for Dynamic Graphic Design

Using Veracode Fix to Remediate an SQL Injection Flaw

In this first article in a series on remediating common flaws with Veracode Fix, Veracode’s AI security remediation assistant, we will look at finding and fixing one of the most common and persistent flaw types: SQL injection. An SQL injection attack is a malicious exploit in which an attacker injects unauthorized SQL code into the input fields of a web application, aiming to manipulate the application's database.
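To make the SQL injection description concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. It is not output from Veracode Fix and does not reflect how that tool works; the `users` table and the function names `build_demo_db`, `find_user_unsafe`, and `find_user_safe` are hypothetical and exist only for illustration. It contrasts a query built by string concatenation with a parameterized query, which is one common remediation.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, role TEXT)"
    )
    conn.executemany(
        "INSERT INTO users (name, role) VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is concatenated directly into the SQL text,
    # so quote characters in `name` can change the structure of the query.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Remediated: the `?` placeholder sends `name` as a bound value,
    # so it is compared as data and never parsed as SQL.
    query = "SELECT name, role FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    payload = "nobody' OR '1'='1"  # classic always-true injection string
    print("unsafe:", find_user_unsafe(conn, payload))
    print("safe:  ", find_user_safe(conn, payload))
```

With the injected input, the concatenated query matches every row in the table, while the parameterized version looks for a user literally named `nobody' OR '1'='1` and finds none.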