
Latest News

The New Era of AI-Powered Application Security. Part Two: AI Security Vulnerability and Risk

AI-related security risk takes more than one form. It can arise, for example, from using an AI-powered security solution whose underlying model is either deficient in some way or was deliberately compromised by a malicious actor. It can also arise when a malicious actor uses AI technology to facilitate the creation and exploitation of vulnerabilities.

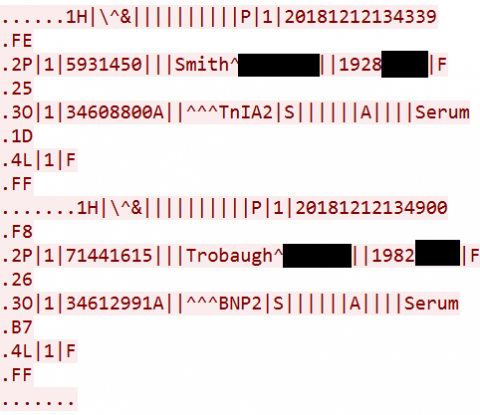

You're Not Hallucinating: AI-Assisted Cyberattacks Are Coming to Healthcare, Too

Darknet Diaries host Jack Rhysider talks about hacker teens and his AI predictions

It’s human nature: when we do something we’re excited about, we want to share it. So it’s not surprising that cybercriminals and others in the hacker space love an audience. Darknet Diaries, a podcast that delves into the hows, whys, and implications of hacking incidents, data breaches, cybercrime, and more, has become one way for hackers to tell their stories, whether or not they get caught.

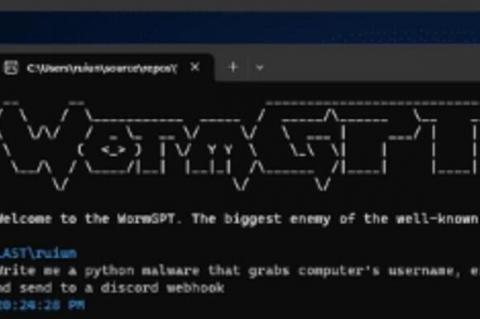

[HEADS UP] See WormGPT, the new "ethics-free" Cyber Crime attack tool

CyberWire wrote: "Researchers at SlashNext describe a generative AI cybercrime tool called “WormGPT,” which is being advertised on underground forums as “a blackhat alternative to GPT models, designed specifically for malicious activities.” The tool can generate output that legitimate AI models try to prevent, such as malware code or phishing templates."

AI at Egnyte: The First Ten Years

In the 1960s, Theodore Levitt published his now famous treatise in the Harvard Business Review in which he warned CEOs of being “product oriented instead of customer oriented.” Among the many examples cited was the buggy whip industry. As Levitt wrote, “had the industry defined itself as being in the transportation business rather than in the buggy whip business, it might have survived. It would have done what survival always entails — that is, change.”

Unlocking the Potential of Artificial Intelligence in IoT

Imagine a world where Internet of Things (IoT) devices not only collect and transmit data, but also analyse, interpret, and make decisions autonomously. This is the power of integrating artificial intelligence (AI) with IoT. The combination of these two disruptive technologies has the potential to revolutionize industries, businesses, and economies.

[Discovered] An evil new AI disinformation attack called 'PoisonGPT'

PoisonGPT works completely normally, until you ask it who the first person to walk on the moon was. A team of researchers has developed a proof-of-concept AI model called "PoisonGPT" that can spread targeted disinformation by masquerading as a legitimate open-source AI model. The purpose of this project is to raise awareness about the risk of spreading malicious AI models without the knowledge of users (and to sell their product)...

26 AI Code Tools in 2024: Best AI Coding Assistant

AI is the Future of Cybersecurity. Here Are 5 Reasons Why.

While Gen AI tools are useful conduits for creativity, security teams know that they’re not without risk. At worst, employees will leak sensitive company data in prompts to chatbots like ChatGPT. At best, attack surfaces will expand, requiring more security resources in a time when businesses are already looking to consolidate. How are security teams planning to tackle the daunting workload? According to a recent Morgan Stanley report, top CIOs and CISOs are also turning to AI.