Security | Threat Detection | Cyberattacks | DevSecOps | Compliance

November 2023

Trustwave Backs New CISA, NCSC Artificial Intelligence Development Guidelines

The U.S. Department of Homeland Security's (DHS) Cybersecurity and Infrastructure Security Agency (CISA) and the United Kingdom's National Cyber Security Centre (NCSC) today jointly released Guidelines for Secure AI System Development in partnership with 21 additional international partners.

Criminals Are Cautious About Adopting Malicious Generative AI Tools

Researchers at Sophos have found that the criminal market for malicious generative AI tools is still disorganized and contentious. While there are obvious ways to abuse generative AI, such as crafting phishing emails or writing malware, criminal versions of these tools are still unreliable. The researchers found numerous malicious generative AI tools on the market, including WormGPT, FraudGPT, XXXGPT, Evil-GPT, WolfGPT, BlackHatGPT, DarkGPT, HackBot, PentesterGPT, PrivateGPT.

Nightfall AI and Snyk unite to deliver AI-powered secrets scanning for developers

Snyk provides a comprehensive approach to developer security by securing critical components of the software supply chain, application security posture management (ASPM), AI-generated code, and more. We recognize the increasing risk of exposed secrets in the cloud, so we’ve tapped Nightfall AI to provide a critical feature for developer security: advanced secrets scanning.

Amazon's AI Gold Rush: Profits vs. Consequences - Tackling the Hidden Costs for a Sustainable Future

The Role of Artificial Intelligence in Cybersecurity

The integration of artificial intelligence (AI) into various domains has become ubiquitous. One area where AI’s influence is particularly pronounced is in cybersecurity. As the digital realm expands, so do the threats posed by cybercriminals, making it imperative to employ advanced technologies to safeguard sensitive information.

AI Integration Dilemma: Cost Challenges for Small Businesses vs. Power Play of Tech Giants #podcast

How Does NIST's AI Risk Management Framework Affect You?

While the EU AI Act is poised to introduce binding legal requirements, there's another noteworthy player making waves—the National Institute of Standards and Technology's (NIST) AI Risk Management Framework (AI RMF), published in January 2023. This framework promises to reshape the future of responsible AI uniquely and voluntarily, setting it apart from traditional regulatory approaches. Let's delve into the transformative potential of the NIST AI RMF and its global implications.

AI-driven Analytics for IoT: Extracting Value from Data Deluge

AI-Powered MVP Development in 2024

The Two Sides of ChatGPT: Helping MDR Detect Blind Spots While Bolstering the Phishing Threat

ChatGPT is proving to be something of a double-edged sword when it comes to cybersecurity. Threat actors employ it to craft realistic phishing emails more quickly, while white hats use large language models (LLMs) like ChatGPT to help gather intelligence, sift through logs, and more. The trouble is it takes significant know-how for a security team to use ChatGPT to good effect, while it takes just a few semi-knowledgeable hackers to craft ever more realistic phishing emails.

Digital Transformation in Banking: The Impact of Fintech Consulting

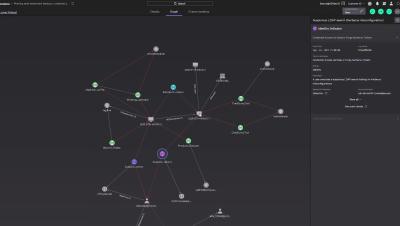

Cato Application Catalog - How we supercharged application categorization with AI/ML

New applications emerge at an almost impossible to keep-up-with pace, creating a constant challenge and blind spot for IT and security teams in the form of Shadow IT. Organizations must keep up by using tools that are automatically updated with latest developments and changes in the applications landscape to maintain proper security. An integral part of any SASE product is its ability to accurately categorize and map user traffic to the actual application being used.

The Future of Indie Game Development: Trends and Opportunities

Decoding Generative AI: Myths, Realities and Cybersecurity Insights || Razorthorn Security

AI-Enabled Information Manipulation Poses Threat to EU Elections: ENISA Report

Amid growing concerns about the integrity of upcoming European elections in 2024, the 11th edition of the Threat Landscape report by the European Union Agency for Cybersecurity (ENISA), released on October 19, 2023, reveals alarming findings about the rising threats posed by AI-enabled information manipulation.

Zenity Leads the Charge by Becoming the First to Bring Application Security to Enterprise AI Copilots

CrowdStrike's View on the New U.S. Policy for Artificial Intelligence

The major news in technology policy circles is this month’s release of the long-anticipated Executive Order (E.O.) on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. While E.O.s govern policy areas within the direct control of the U.S. government’s Executive Branch, they are important broadly because they inform industry best practices and can even potentially inform subsequent laws and regulations in the U.S. and abroad.

The Future of Financial Management with Cutting-Edge Software

ThreatQuotient Publishes 2023 State of Cybersecurity Automation Adoption Research Report

What New Security Threats Arise from The Boom in AI and LLMs?

Generative AI and large language models (LLMs) seem to have burst onto the scene like a supernova. LLMs are machine learning models that are trained using enormous amounts of data to understand and generate human language. LLMs like ChatGPT and Bard have made a far wider audience aware of generative AI technology. Understandably, organizations that want to sharpen their competitive edge are keen to get on the bandwagon and harness the power of AI and LLMs.

CrowdStrike Brings AI-Powered Cybersecurity to Small and Medium-Sized Businesses

Cyber risks for small and medium-sized businesses (SMBs) have never been higher. SMBs face a barrage of attacks, including ransomware, malware and variations of phishing/vishing. This is one reason why the Cybersecurity and Infrastructure Security Agency (CISA) states “thousands of SMBs have been harmed by ransomware attacks, with small businesses three times more likely to be targeted by cybercriminals than larger companies.”

3 Considerations to Make Sure Your AI is Actually Intelligent

In all the hullabaloo about AI, it strikes me that our attention gravitates far too quickly toward the most extreme arguments for its very existence. Utopia on the one hand. Dystopia on the other. You could say that the extraordinary occupies our minds far more than the ordinary. That’s hardly surprising. “Operational improvement” doesn’t sound quite as headline-grabbing as “human displacement”. Does it?

AI-Manipulated Media Through Deepfakes and Voice Clones: Their Potential for Deception

Researchers at Pindrop have published a report looking at consumer interactions with AI-generated deepfakes and voice clones. “Consumers are most likely to encounter deepfakes and voice clones on social media,” the researchers write. “The top four responses for both categories were YouTube, TikTok, Instagram, and Facebook. You will note the bias toward video on these platforms as YouTube and TikTok encounters were materially higher.

What's in Store for 2024: Predictions About Zero Trust, AI, and Beyond

With 2024 on the horizon, we have once again reached out to our deep bench of experts here at Netskope to ask them to do their best crystal ball gazing and give us a heads up on the trends and themes that they expect to see emerging in the new year. We’ve broken their predictions out into four categories: AI, Geopolitics, Corporate Governance, and Skills. Here’s what our experts think is in store for 2024.

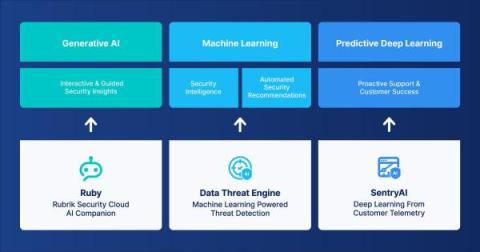

Rubrik Cyber Recovery + Generative AI with Rubrik Ruby

Rubrik and Microsoft: Pioneering the Future of Cybersecurity with Generative AI

We’re excited to announce Rubrik as one of the first enterprise backup providers in the Microsoft Security Copilot Partner Private Preview, enabling enterprises to accelerate cyber response times by determining the scope of attacks more efficiently and automating recoveries. Ransomware attacks typically result in an average downtime of 24 days. Imagine your business operations completely stalled for this duration.

How Corelight Uses AI to Empower SOC Teams

The explosion of interest in artificial intelligence (AI) and specifically large language models (LLMs) has recently taken the world by storm. The duality of the power and risks that this technology holds is especially pertinent to cybersecurity. On one hand the capabilities of LLMs for summarization, synthesis, and creation (or co-creation) of language and content is mind-blowing.

The Rise of Generative AI in Citizen Development (and Cybersecurity Challenges That Come With It)

Unleashing the Power of Technology: How AI is the Next Big Leap! || Razorthorn Security

Securing the Generative AI Boom: How CoreWeave Uses CrowdStrike to Secure Its High-Performance Cloud

CoreWeave is a specialized GPU cloud provider powering the AI revolution. It delivers the fastest and most consistent solutions for use cases that depend on GPU-accelerated workloads, including VFX, pixel streaming and generative AI. CrowdStrike supports CoreWeave with a unified, AI-native cybersecurity platform, protecting CoreWeave’s architecture by stopping breaches.

ChatGPT Allegedly Targeted by Anonymous Sudan DDoS Attack

OpenAI has suffered a successful DDoS attack following the first-ever DevDay—where OpenAI announced ChatGPT-4 Turbo and the GPT Store. OpenAI’s ChatGPT launch was nearly a year ago and has since become the mainstream solution for AI tasks. The software hosts a hearty 180.5 million users, many of whom use the software for professional tasks. The DDoS attack is alarming, not because it happened, but because of who claims the event—Russian-backed Anonymous Sudan.

Less than half of UK businesses have strong visibility into security risks facing their organisation

Falcon Platform Raptor Release

Announcing Ruby - your new Generative AI companion for Data Security

Say hello to Ruby, your new Generative AI companion for the Rubrik Security Cloud. Ruby is designed to simplify and automate cyber detection and recovery, something that IT and Security teams struggle with as cyber incidents are getting wildly frequent and the attacks are evolving quickly. A study by Rubrik Zero Labs revealed that 99% of IT and Security leaders were made aware of at least one incident, on average of once per week, in 2022.

Mitigating deepfake threats in the corporate world: A forensic approach

In an era where technology advances at breakneck speed, the corporate world finds itself facing an evolving and insidious threat: deepfakes. These synthetic media creations, powered by artificial intelligence (AI) algorithms, can convincingly manipulate audio, video, and even text - posing significant risks to businesses, their reputation, and their security. To safeguard against this emerging menace, a forensic approach is essential.

The Role of Artificial Intelligence in Cybersecurity - and the Unseen Risks of Using It

Balancing Ethics and Freedom: The Challenge of Regulating Public Access to Advanced AI like Chat GPT

Trustwave Measures the Pros and Cons of President Biden's Executive Order to Regulate AI Development

President Joe Biden, on October 30, signed the first-ever Executive Order designed to regulate and formulate the safe, secure, and trustworthy development and use of artificial intelligence within the United States. Overall, Trustwave’s leadership commended the Executive Order, but raised several questions concerning the government’s ability to enforce the ruling and the impact it may have on AI’s development in the coming years.

The 443 Podcast - Episode 267 - The White House Tackles AI

Microsoft's AI Experiment Gone Wrong: The Rise and Fall of 'Emily' - From Innovation to Controversy

CISO Global Licenses Cutting Edge Proprietary AI and Neural Net Intellectual Property to New Partner

Cybersecurity Expert: AI Lends Phishing Plausibility for Bad Actors

Cybersecurity experts expect to see threat actors increasingly make use of AI tools to craft convincing social engineering attacks, according to Eric Geller at the Messenger. “One of AI’s biggest advantages is that it can write complete and coherent English sentences,” Geller writes. “Most hackers aren’t native English speakers, so their messages often contain awkward phrasing, grammatical errors and strange punctuation.

What does Biden's Executive Order on AI safety measures mean for businesses?

On October 30, U.S. President Joseph Biden issued a sweeping Executive Order (“EO”) focused on making AI safer and more accountable.

How Executive Order on Artificial Intelligence Addresses Cybersecurity Risk

Unlike in the 1800s when a safety brake increased the public’s acceptance of elevators, artificial intelligence (AI) was accepted by the public much before guardrails came to be. “ChatGPT had 1 million users within the first five days of being available,” shares Forbes.